Jennifer Ellison, MSc¹*, Joelle Cayen, BHSc², Linda Pelude, MSc², Robyn Mitchell, MHSc², and Kathryn Bush, MSc¹

¹Alberta Health Services, Lethbridge, Alberta, Canada

²Public Health Agency of Canada, Ottawa, Ontario, Canada

*Corresponding author:

Jennifer Ellison

Alberta Health Services

Lethbridge, AB, Canada


KEYWORDS

Surveillance; data quality; bloodstream infections; MRSA; VRE; CLABSI; competency

INTRODUCTION

The Canadian Nosocomial Infection Surveillance Program (CNISP) is a collaboration between the Public Health Agency of Canada, the Association of Medical Microbiology and Infectious Diseases Canada and sentinel hospitals across Canada [1]. In 2019, CNISP conducted nation-wide surveillance of healthcare-associated infections (HAIs) and antibiotic-resistant organisms (AROs) among 78 acute care hospitals across Canada.

The goal of CNISP is to facilitate the prevention, control and reduction of HAIs and AROs in Canadian acute care hospitals through active surveillance and reporting. Data are collected to measure the burden of HAIs (including AROs), establish benchmark rates for internal and external comparison, identify potential risk factors, and assess specific interventions to improve the quality of patient care in Canadian acute care hospitals.

Standardized surveillance methods and case definitions are used for all CNISP surveillance protocols [2]. Trained infection control staff apply the methods and definitions outlined in the surveillance protocols to identify eligible patients and review their medical records. Likewise, hospitals that do not participate in CNISP but benchmark their internal performance against CNISP aggregate data must apply the same methods and definitions to allow for valid comparisons. The protocols and definitions, which rely on a range of clinical and laboratory criteria, were designed to maximize consistency in surveillance across hospitals.

However, it has been suggested that standardized surveillance case definitions and protocols will not address every potential patient scenario [3]. As a result, staff presented with the same scenario may not apply CNISP surveillance definitions consistently. Additionally, because CNISP surveillance definitions are periodically revised, staff may fail to apply the newer definitions, potentially resulting in misclassification of recent cases. CNISP has previously conducted reliability audits of surveillance data [4-5]; however, it has not yet audited the reliability of healthcare-associated bloodstream infection (HA-BSI) data. To continue improving the quality of its surveillance data, the network undertook a case study to assess how accurately surveillance definitions for HA-BSIs are applied. Our aim was to describe the validity and reliability of staff application of CNISP surveillance definitions for HA-BSI, assessed using individual case examples [3, 6-8].

METHODS

Subject matter experts, including members of the CNISP Data Quality Working Group and the chairs of the CNISP methicillin-resistant Staphylococcus aureus (MRSA), vancomycin-resistant enterococci (VRE) and central line-associated bloodstream infection (CLABSI) working groups, developed and validated case examples for inclusion in an online survey. The survey was built in Voxco© and included nine case examples with 19 associated questions.

This survey was considered exempt from the requirement for ethics approval because the activities described here fall outside the scope of research ethics board (REB) review under the Tri-Council Policy Statement 2 (2018), Article 2.5: “Quality assurance and quality improvement studies, program evaluation activities, and performance reviews, or testing within normal educational requirements when used exclusively for assessment, management or improvement purposes, do not constitute research for the purposes of this Policy, and do not fall within the scope of REB review” [9]. The survey was conducted as part of routine quality assurance of our surveillance projects. All individual responses were confidential, and results were reported only in aggregate.

Staff from the 78 CNISP participating hospitals were invited to complete the case survey between September 1 and 30, 2019. These hospitals represented 40 hospital networks, each comprising one to six individual hospitals. At the time of the study, the CNISP network had approximately 240 active members (including infectious disease physicians, medical microbiologists, epidemiologists and infection control professionals). However, not all active members took part, given their role in CNISP surveillance; for instance, some members (e.g., data entry staff or laboratory technicians) do not apply the case definitions. The hospital networks were located in one of three regions: West (British Columbia, Alberta, Saskatchewan and Manitoba), Central (Ontario and Quebec) and East (New Brunswick, Nova Scotia, Newfoundland and Labrador, and Prince Edward Island). Because this was both an educational intervention and a data quality investigation, each site could have multiple responders, and staff could respond individually or as a group.

The case study assessed the ability of staff to correctly apply three key surveillance definitions used in CLABSI, MRSA bloodstream infection (BSI), methicillin-sensitive Staphylococcus aureus (MSSA) BSI and VRE BSI surveillance: first, the surveillance case definition (i.e., eligibility for inclusion in surveillance); second, the acquisition classification (e.g., acquired at your facility, acquired at another facility, or community-associated); and third, the source of infection (e.g., IV catheter-associated, primary bacteremia, skin/soft tissue). The case examples reflected some of the complex patient scenarios that staff may encounter in their daily surveillance of healthcare-associated BSIs. Correct responses were reported as a proportion of all responses, and data cleaning and analysis were performed using SAS version 9.4.

RESULTS

Twenty-three of the 40 CNISP hospital networks (58%) completed the survey. Among these 23 networks, there were 41 individual respondents (88%) and six group respondents (12%), who together provided 723 responses to the 19 questions. Responses were received from all three regions (West 38%, Central 53%, East 9%). The mean survey score was 88% (median 90%, range 54-100%).
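The Results report a mean, median and range of survey scores alongside pooled proportions of correct responses. The paper does not state exactly how each respondent's score was computed, and the analysis itself was done in SAS 9.4, so the Python sketch below is illustrative only. It assumes, as a hypothetical scoring rule, that a respondent's (or group's) score is the number of correct answers divided by the number of questions answered; the function names `respondent_score` and `summarize_scores` are likewise hypothetical.

```python
from statistics import mean, median


def respondent_score(correct, answered):
    """Hypothetical scoring rule (assumption, not from the paper):
    percentage of answered questions that were answered correctly."""
    if answered <= 0:
        raise ValueError("respondent answered no questions")
    return 100 * correct / answered


def summarize_scores(scores):
    """Return the mean, median and range (min, max) of respondent scores in percent."""
    if not scores:
        raise ValueError("no scores to summarize")
    return {
        "mean": mean(scores),
        "median": median(scores),
        "range": (min(scores), max(scores)),
    }
```

In this sketch a group response counts as a single respondent, in line with how the Results tally six group respondents alongside 41 individuals.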

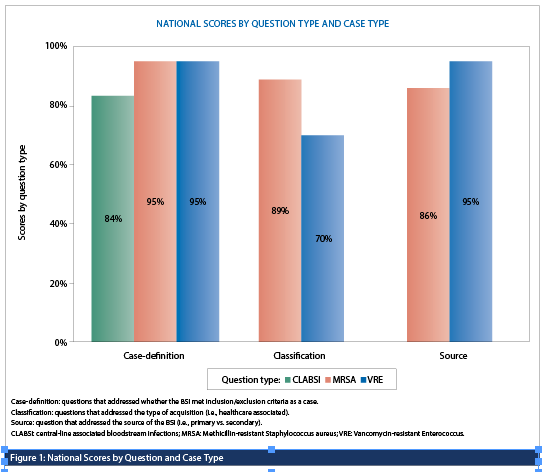

Scores were similar across question types: 88% of responses (355/401) correctly applied the case definition for inclusion of a BSI in surveillance, 84% (136/161) correctly applied the acquisition classification, and 88% (142/161) correctly classified the source of infection (e.g., IV catheter-associated, primary bacteremia, skin/soft tissue). However, the proportion of responses that correctly applied the CNISP surveillance definitions varied significantly by module (CLABSI vs. MRSA BSI vs. VRE BSI) (Figure 1). Scores were also significantly higher for group responses than for individual responses (96.4% vs 86.5%, p<0.05).
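The proportions by question type can be recomputed directly from the reported counts. The short Python sketch below uses a hypothetical helper, `percent_correct` (not the SAS code used for the analysis), to calculate the percentage correct for each question type and the pooled percentage across all three.

```python
def percent_correct(counts):
    """counts maps a label to a (correct, total) pair.

    Returns the percentage correct for each label plus a pooled
    percentage computed from the summed numerators and denominators.
    """
    result = {label: 100 * c / n for label, (c, n) in counts.items()}
    total_correct = sum(c for c, _ in counts.values())
    total_n = sum(n for _, n in counts.values())
    result["overall (pooled)"] = 100 * total_correct / total_n
    return result


# (correct, total) counts as reported in the Results section.
reported = {
    "case definition": (355, 401),
    "acquisition classification": (136, 161),
    "source of infection": (142, 161),
}

for label, pct in percent_correct(reported).items():
    print(f"{label}: {pct:.1f}%")
```

Running it prints 88.5%, 84.5%, 88.2% and 87.6%. The pooled numerator (355 + 136 + 142 = 633) and denominator (401 + 161 + 161 = 723) give the 633/723 overall figure cited in the Discussion.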

DISCUSSION

We assessed how accurately CNISP surveillance definitions for healthcare-associated bloodstream infections were applied. Overall, 88% (633/723) of responses correctly applied the case definition, case classification and source of infection criteria for BSI cases. Similar case study investigations validating the application of standard surveillance criteria and protocols have been conducted by the Centers for Disease Control and Prevention (CDC) National Healthcare Safety Network (NHSN) [8] and the Texas Department of State Health Services [10]. In the NHSN investigation, 22 case studies conducted between 2010 and 2016 yielded correct responses 62.5% of the time, whereas in the Texas investigation only 6% (5 of 88) of participants correctly identified all elements of both case scenarios provided.

The proportion of responses that correctly applied the case classification was significantly lower for VRE BSI than for MRSA BSI (70% vs 89%, p<0.05), which highlights the need to review and clarify the case classification definition for this module and to provide additional training. Feedback from stakeholders was positive, and the results were very encouraging.

It was interesting, though not surprising, that hospitals submitting their responses as a group had a significantly higher average score (96.4%) than individual respondents (86.5%). This may demonstrate the value of collaboration between infection control professionals in improving data quality [11-12]. In addition, the training of infection control professionals who participate in CNISP likely contributed to the high overall scores among both individuals and groups, reflecting the quality of the data collected through CNISP.

There are several limitations to this case study. To limit the time required to complete the survey, only nine case examples were included. As a result, internal reliability was not assessed: we could not include similar questions within the same survey to check whether both would be answered the same way. Future surveys that focus on a single protocol could address this by including similar questions. We also did not assess test-retest reliability by having staff retake the same survey at a later date. In addition, Holmes et al. [13] suggested that case studies developed by experts in the field may not capture all potential factors used to assign case definitions. The survey response rate (58%) was average, and the results may be subject to selection bias: those who chose to complete the survey are likely the staff most familiar with CNISP surveillance and therefore the most likely to apply the definitions correctly. Distributing the survey at the end of the summer may have lowered the response rate; however, the response rate was still sufficient to evaluate data quality [14]. Future surveys could assess whether infection control professionals' experience was a factor in the response rate by analyzing responses according to CIC certification. Lastly, it is unknown whether respondents were a representative sample of staff conducting CNISP surveillance.

Despite these limitations, this was the first case study conducted by CNISP to assess the validity and reliability of staff application of surveillance definitions. The high percentage of responses that correctly applied CNISP surveillance definitions suggests that the surveillance data collected through CNISP are of high quality. Respondents reported that the case study was informative and served as a robust training tool. As part of ongoing data quality monitoring, the network plans to conduct additional case studies focused on other surveillance modules, such as Clostridioides difficile infection, surgical site infection and COVID-19.

REFERENCES

1. Canadian Nosocomial Infection Surveillance Program. (2022). Device and surgical procedure-related infections in Canadian acute care hospitals from 2011 to 2020. Canada Communicable Disease Report, 48(7/8), 325-339. https://doi.org/10.14745/ccdr.v48i78a04

2. Canadian Nosocomial Infection Surveillance Program. HAI Surveillance Case Definitions 2020. Retrieved February 20, 2023, from https://ipac-canada.org/photos/custom/Members/CNISPpublications/CNISP_2021_HAI_Surveillance_Case_Definitions_EN.pdf

3. Wright, M.O., Hebden, J.N., Allen-Bridson, K., Morrell, G.C., Horan, T. (2010). Healthcare-associated Infections Studies Project: An American Journal of Infection Control and National Healthcare Safety Network Data Quality Collaboration. American Journal of Infection Control, 38(5), 416-418. https://doi.org/10.1016/j.ajic.2010.04.198

4. Forrester, L., Collet, J.C., Mitchell, R., et al. (2012). How reliable are national surveillance data? Findings from an audit of Canadian methicillin-resistant Staphylococcus aureus surveillance data. American Journal of Infection Control, 40(2), 102-107. https://doi.org/10.1016/j.ajic.2011.03.005

5. Leduc, S., Bush, K., Campbell, J., et al. (2015). What can an audit of national surveillance data tell us? Findings from an audit of Canadian vancomycin-resistant enterococci surveillance data. Canadian Journal of Infection Control, 30, 75-81.

6. Keller, S.C., Linkin, D.R., Fishman, N.O., Lautenbach, E. (2013). Variations in identification of healthcare-associated infections. Infection Control and Hospital Epidemiology, 34(7), 678-686. https://doi.org/10.1086/670999

7. Schroder, C., Behnke, M., Gastmeier, P., Schwab, F., Geffers, C. (2015). Case vignettes to evaluate the accuracy of identifying healthcare-associated infections by surveillance persons. Journal of Hospital Infection, 90, 322-326. https://doi.org/10.1016/j.jhin.2015.01.014

8. Wright, M.O., Allen-Bridson, K., Hebden, J.N. (2017). Assessment of the accuracy and consistency in the application of standardized surveillance definitions: A summary of the American Journal of Infection Control and National Healthcare Safety Network case studies, 2010-2016. American Journal of Infection Control, 45(6), 607-611. https://doi.org/10.1016/j.ajic.2017.03.035

9. Government of Canada. (2018). Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans – TCPS 2. https://ethics.gc.ca/eng/policy-politique_tcps2-eptc2_2018.html.

10. Adams, J., Mauldin, T., Yates, K., et al. (2022). Factors related to the accurate application of NHSN surveillance definitions for CAUTI and CLABSI in Texas hospitals: A cross-sectional survey. American Journal of Infection Control, 50(1), 111-113. https://doi.org/10.1016/j.ajic.2021.07.007

11. Kao, A.B., Miller, N., Torney, C., Hartnett, A., Couzin, I.D. (2014). Collective Learning and Optimal Consensus Decisions in Social Animal Groups. PLOS Computational Biology, 10(8), e1003762. https://doi.org/10.1371/journal.pcbi.1003762

12. University of Minnesota. (2022). Benefits to consensus decision making. Retrieved May 2022, from https://extension.umn.edu/leadership-development/benefits-consensus-decision-making

13. Holmes, K., Moinuddin, M., Steinfeld, S. (2022). Developing valid test bank of surveillance case study scenarios for inter-rater reliability. American Journal of Infection Control, 50(8), 960-962.

14. Lindemann, N. (2021). What’s the average survey response rate. Retrieved on 21 February 2023, from https://pointerpro.com/blog/average-survey-response-rate.